AI Rules

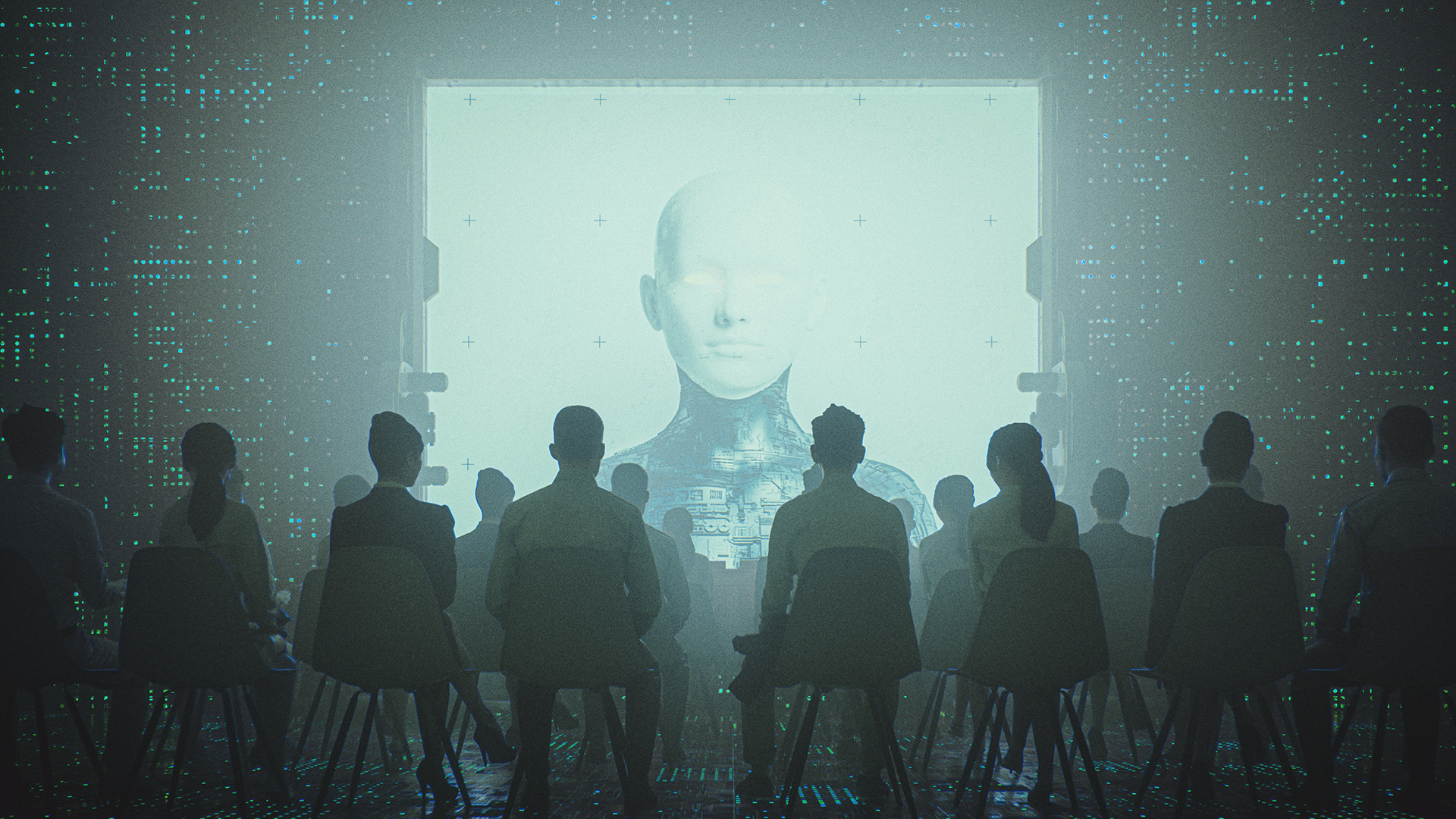

As advances in AI technology gain momentum, it’s clear that the technology can be used for both good and ill. Is there a moral code we can trust to guide our personal use of it?

Nvidia, the California-based advanced chip maker, experienced exponential growth in 2023. It posted US$22 billion in revenue in its final fiscal quarter of 2023, up 265 percent from the fourth quarter of 2022. The company provides 70 percent of the artificial intelligence (AI) industry’s chips. Other companies that are increasingly competing in the same space include Amazon, Google, Meta and Microsoft. According to Joseph Fuller, Professor of Management Practice at Harvard Business School, “Virtually every big company now has multiple AI systems and counts the deployment of AI as integral to their strategy.” These factors, along with the growth of AI data centers alongside manufacturing and service companies, are a clear indication of the momentum that the use of AI is gaining in many sectors.

Nvidia’s pioneering use of supercomputing graphics processing units (GPUs) in machine learning, automotive, medical, healthcare, pharmaceutical, aerospace, education, manufacturing, banking, retail and many other fields is revolutionizing life across the planet. Just a few examples from the medical field serve to illustrate the enormous potential for good. In 2023 the New York Times reported that Hungarian doctors used an AI tool to detect breast cancer four years before it developed enough to be detected by a radiologist. AI is also playing a role in discovering new drugs at a much lower cost than traditional drug development. According to Harvard Law’s Bill of Health blog, AI not only promises to speed up drug discovery from start to finish, but also to find treatments for previously incurable diseases.

But with every positive new technological development there comes a downside.

In the fifteenth century, for instance, Gutenberg’s printing press brought increased availability of biblical knowledge, but it also threatened the religious institutions that filtered that knowledge. Bibles printed in local languages freed the common man from the restrictions imposed by the Catholic church. From the church’s perspective this freedom challenged its authority. But the printing press also facilitated the church’s use of the printed word to defend its position.

In the early twentieth century, the splitting of the atom brought the possibility of clean energy production along with the threat of nuclear weapons and human annihilation. This dual-use technology, both beneficial and threatening, is a feature of such innovation, precisely because human motivation and action cannot be completely insulated from bad intentions.

In more recent decades, we’ve seen that the Internet of Things—the growing number of physical objects that can be monitored or controlled digitally—has made life both wondrous and harmful for human society. We rejoice in unlimited and instant access to information, yet we suffer from vulnerability to bad actors and damage to our children’s minds. Now we see similar potential with AI. Despite its already massive positive impact, there are real fears of its malicious misuse. Consider the creation of misleading images and voice files in political messages and ransom demands.

Some observers have said that the risk with AI is existential, that anticipating and controlling its widespread misuse is extraordinarily difficult. Neuroscientist Anders Sandberg told Vision, “Normally people say that if a machine’s misbehaving we can just pull the plug. But have you tried pulling the plug on the Internet? Have you tried pulling the plug on the stock market? There are many machines that we already have where it’s infeasible to pull the plug, because they’re too distributed or too essential.”

For now, the fear that machines will learn to take over from their human creators and run amok seems unfounded. As for the more distant future when “artificial general intelligence” might be possible, Seán Ó hÉigeartaigh, executive director of the Centre for the Study of Existential Risk (CSER), noted in a Vision interview: “If we’re to bring into this world intelligence—either as our tools or as independent entities—that’ll allow us to change the world even more, we need to think very carefully about the kinds of goals we give them and the level of autonomy these future systems might have, because it may be very difficult for us to turn back the clock.”

“One can imagine such technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand. Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.”

More worrisome at the moment is the growing threat of AI-powered government and corporate intrusion into private life, as is already happening in China. With 700 million cameras in place for facial and gait recognition, virtually everyone in China can be tracked. Add to this the fact that the lives of Chinese residents are at the mercy of individual social scores, based on trackable compliance with government norms, and the insidious nature of an authoritarian, neoliberal, data-driven surveillance society becomes clear. Punishment for disobedience can take the form of higher mortgage rates and public transportation fares along with slower internet speeds, among other “social” penalties. Thus, social and ideological control becomes possible through government-engineered self-regulation.

What is happening in one-party China now threatens the world’s market democracies, where, according to social psychologist Shoshana Zuboff, such surveillance has already taken a giant step across the threshold. She has written that the new economic order—familiar to us from the algorithms that track our every Internet search, preference and purchase—is in fact the work of surveillance capitalism, which threatens to dominate the social order and shape the digital future if left unresisted.

Because of the nastiness social media platforms have promoted, World Wide Web inventor Sir Tim Berners-Lee has called for turning the divisive digital world we’ve allowed to develop right side up. Everyone, he says, must retain the rights to their own information. But as the Web continues to evolve, he foresees that many of us will have AI assistants in the future. They will work for us, freeing us to have authentic face-to-face relationships again. That would solve a problem that Berners-Lee’s MIT colleague and social scientist Sherry Turkle has identified. She has called for rejection of the idea that our digital devices can provide authentic relationships. Because we have come to “expect more from technology and less from each other,” she believes that we are depriving ourselves and our children of such interaction, reality and emotion.

The moral and ethical challenges of AI development are often left by innovators to social scientists, philosophers and ethicists. As with other new technologies, the power to prevent their downsides is limited. For all that AI contributes to human improvement, the lack of failsafe regulation poses a serious threat. Sir Martin Rees, director of CSER at the University of Cambridge, told Vision, “I think we’re going to try to regulate all these technologies on grounds of prudence and ethics, but we’ll be ineffective in regulating them, because whatever can be done will be done somewhere by someone.” When commercial, ideological or even rogue interests are involved, ethical concerns can quickly take a back seat.

“Are the ends that AI is intended to serve worthy ends, or is AI merely a super-improved means to unimproved ends?”

Companies like Google and Microsoft have published ethical guidelines for their use of AI, and the European Parliament has passed the Artificial Intelligence Act of 2024, “the world’s first binding law on artificial intelligence, to reduce risks, create opportunities, combat discrimination, and bring transparency.” While the UN—with the consensus of all 193 member states—has adopted a resolution on AI calling for “safe, secure, and trustworthy artificial intelligence systems” to be developed, it’s non-binding and has no enforcement mechanisms, so concerns remain. Rees also told Vision, “The enterprise is global, with strong commercial pressures, and to actually control the use of these new technologies is, I think, as hopeless as global control of the drug laws or the tax laws. So I am very pessimistic about that.” He is, of course, talking about the national and international level. Can we do anything morally or ethically at the individual level to control our personal use of new technologies?

It’s ironic perhaps that the answers at the personal level are not to be found in modern sources, but in ancient wisdom. Morality has long intersected with wisdom literature, a category that may seem irrelevant in the AI age. But writing about AI and Christian faith, John Wyatt and Stephen N. Williams explain, “The word ‘created’ signals our commitment to belief in a Creator God who has placed humans within an order. It is an order marred and disarranged by human wrongdoing but one that is conducive to human well-being and flourishing, if we can find and walk in the way of wisdom.” Consider then the biblical book of Proverbs that claims to offer both human and divine wisdom, moral principles and practical advice for everyday life. It mentions money, leadership, relationships and good decision-making, among other things. It begins with a statement of purpose: “For learning about wisdom and instruction, for understanding words of insight, for gaining instruction in wise dealing, righteousness, justice, and equity; to teach shrewdness to the simple, knowledge and prudence to the young—let the wise, too, hear and gain in learning, and the discerning acquire skill” (Proverbs 1:2–5 NRSV). Here is trustworthy moral and ethical advice at the personal level from a higher source: “The fear of the Lord is the beginning of knowledge.”

Understanding from a biblical perspective what human beings are and what they are for gives meaning to existence in a world that has been cheated out of that knowledge. You can read more about this resource in our Law, Prophets, Writings series.